Research

Note: Below I give a high-level overview of some of my research topics. However, this page has not been updated since 2021. If you are interested in my more recent research, please see my Google Scholar page or email me.

- Thermodynamics of information

- The physics of agency, function, and meaning

- Machine learning and information theory

- Novel information-theoretic measures for complex systems

- Dissertation research

Thermodynamics of information

In the past few decades, researchers have uncovered a number of fundamental relationships between information and physics (such relationships often go by the name “thermodynamics of information”). In particular, it has been shown that any physically embodied system that processes information, whether a biological organism or a digital computer, must pay a price in physical resources such as energy, time, or memory space.
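A standard example of such a price is Landauer's principle: erasing one bit of information in contact with a heat bath at temperature T releases at least k_B T ln 2 of heat. The short Python sketch that follows evaluates a generalized form of this bound, Q ≥ k_B T [H(p) − H(q)], for a process that changes a system's distribution from p to q. It assumes that all states have equal energy, so only the change in Shannon entropy matters; the helper names (`shannon_entropy_nats`, `min_heat`) are illustrative and are not taken from the publications listed here.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def shannon_entropy_nats(p):
    """Shannon entropy of a discrete distribution, in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def min_heat(p_initial, p_final, temperature):
    """Generalized Landauer bound: minimum heat (in J) released to a bath at
    `temperature` (in K) when the distribution goes from p_initial to p_final.
    Assumption: all states have equal energy, so only the entropy drop counts.
    A negative result means heat may be absorbed rather than released."""
    drop = shannon_entropy_nats(p_initial) - shannon_entropy_nats(p_final)
    return K_B * temperature * drop


# Erasing one fair bit (uniform distribution -> a fixed state) at 300 K
q = min_heat([0.5, 0.5], [1.0, 0.0], 300.0)
print(f"{q:.3e} J")  # prints 2.871e-21 J
```

Erasing a uniformly random two-bit register would, under the same assumptions, cost twice this amount, since the entropy drop doubles.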

Along with David Wolpert at the Santa Fe Institute, I have been studying the relationship between information processing and various kinds of resources, both for concrete models of computation (e.g., Turing machines and digital circuits) and for the kinds of general stochastic processes used in statistical physics.

Selected publications:

- A. Kolchinsky and D.H. Wolpert, “Work, entropy production, and thermodynamics of information under protocol constraints”, Physical Review X, 2021, pdf, press release

- A. Kolchinsky and D.H. Wolpert, “Thermodynamics of Turing Machines”, Physical Review Research, pdf, press release

- D.H. Wolpert, A. Kolchinsky, J.A. Owen, “A space–time tradeoff for implementing a function with master equation dynamics”, Nature Communications, 2019, pdf, press release

- A. Kolchinsky and D.H. Wolpert, “Dependence of dissipation on the initial distribution over states”, J. Stat. Mech., 2017, pdf

The physics of agency, function, and meaning

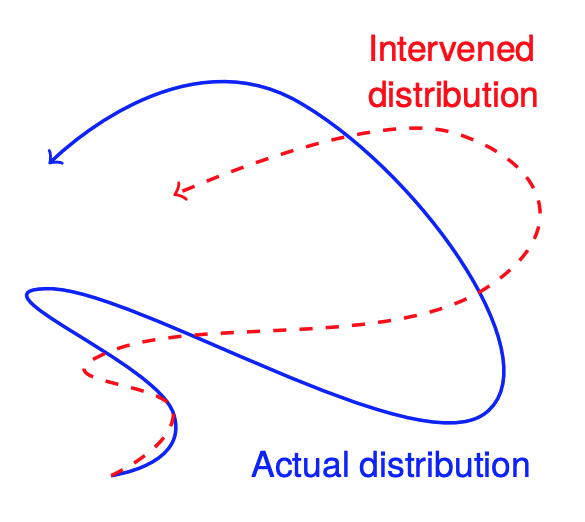

I am interested in using formal methods to understand how properties like “agency”, “function”, and “meaning”, which are so useful for understanding the behavior of living, cognitive beings, emerge from an underlying physical world that does not itself possess such properties. In collaboration with David Wolpert, I proposed defining and analyzing “meaningful information” (also called “semantic information”) in terms of the statistical correlations that are causally necessary for a physical system to remain out of equilibrium. In the future, I hope to use similar ideas to study the origin of agency, function, and meaning in concrete models of self-maintaining nonequilibrium systems, such as the simple protocell models considered in origin-of-life research.

Selected publications:

- A. Kolchinsky, D.H. Wolpert, “Semantic information, autonomous agency and non-equilibrium statistical physics”, Interface Focus, 2018, pdf, press release

Machine learning and information theory

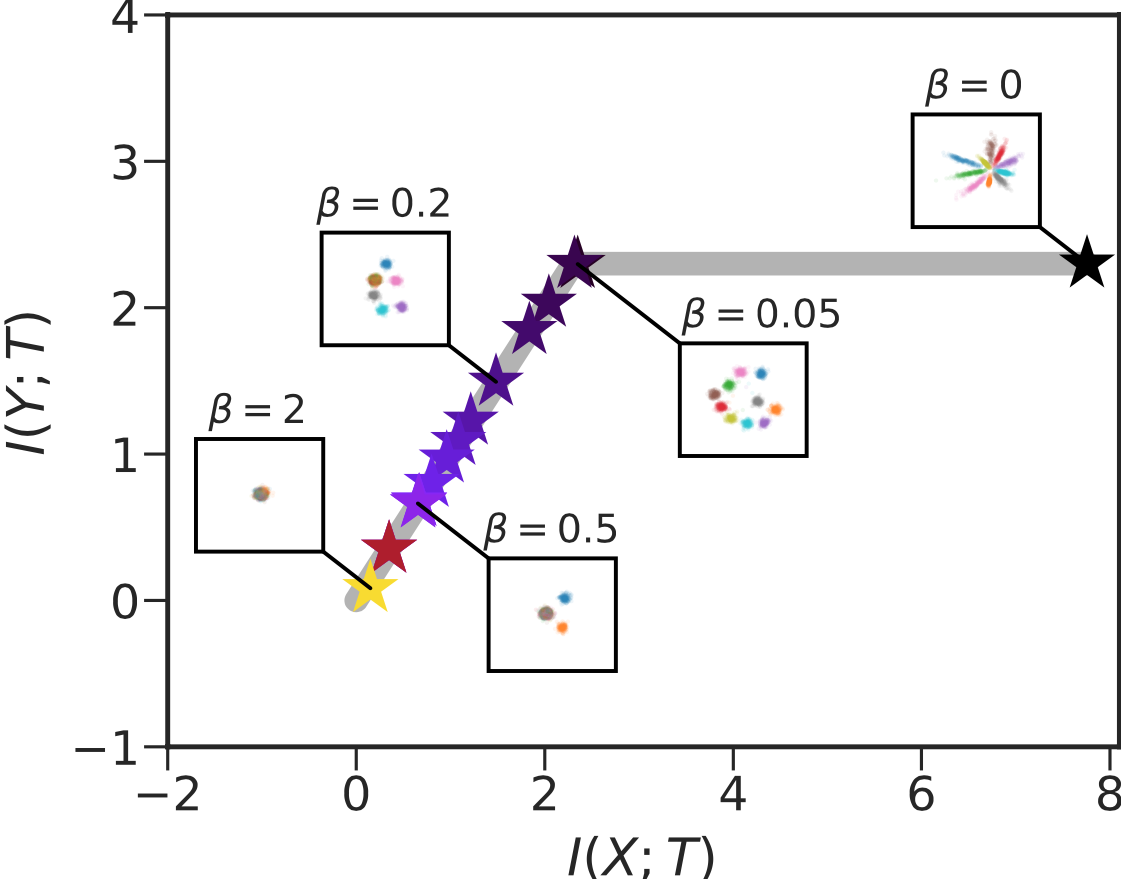

New machine learning approaches (such as deep neural networks) have made revolutionary progress on difficult problems. However, the reasons behind this progress are not yet fully understood from a theoretical perspective. Some researchers have suggested that insight may come from information-theoretic ideas, including the so-called “information bottleneck” principle, which states that a good representation should balance retaining information that is relevant for prediction against compressing away information that is irrelevant.
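For discrete variables, one common way to formalize the information bottleneck is to choose a stochastic encoder q(t|x), mapping the input X to a compressed representation T, that minimizes the Lagrangian I(X;T) − β I(T;Y), where Y is the relevant variable and β > 0 sets the trade-off between compression and prediction. The Python sketch that follows (NumPy assumed; function names hypothetical) only evaluates this objective for a given encoder and does not perform the optimization.

```python
import numpy as np


def mutual_information(joint):
    """Mutual information (in nats) of a 2-D joint probability table."""
    joint = np.asarray(joint, dtype=float)
    px = joint.sum(axis=1, keepdims=True)  # column vector p(a)
    py = joint.sum(axis=0, keepdims=True)  # row vector p(b)
    mask = joint > 0                       # 0 * log 0 is taken as 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px @ py)[mask])))


def ib_lagrangian(p_xy, q_t_given_x, beta):
    """Return (I(X;T) - beta * I(T;Y), I(X;T), I(T;Y)) for encoder q(t|x).
    Rows of q_t_given_x index x, columns index t; each row sums to 1."""
    p_xy = np.asarray(p_xy, dtype=float)
    enc = np.asarray(q_t_given_x, dtype=float)
    p_x = p_xy.sum(axis=1)
    p_xt = p_x[:, None] * enc  # joint p(x, t)
    p_ty = enc.T @ p_xy        # joint p(t, y), using the Markov chain T - X - Y
    i_xt = mutual_information(p_xt)
    i_ty = mutual_information(p_ty)
    return i_xt - beta * i_ty, i_xt, i_ty


# Toy example: X uniform over {0, 1, 2, 3}, and Y = X mod 2
p_xy = np.array([[0.25, 0.0], [0.0, 0.25], [0.25, 0.0], [0.0, 0.25]])
identity = np.eye(4)  # T = X: keeps everything about X
parity = np.array([[1, 0], [0, 1], [1, 0], [0, 1]], dtype=float)  # T = X mod 2

for name, enc in [("identity", identity), ("parity", parity)]:
    loss, i_xt, i_ty = ib_lagrangian(p_xy, enc, beta=2.0)
    print(name, round(loss, 3), round(i_xt, 3), round(i_ty, 3))
```

Because Y here is just the parity of X, the parity encoder discards half of the information about X while keeping all of the information about Y, so at β = 2 it reaches a lower objective than the identity encoder.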

In collaboration with Brendan Tracey and others, I have developed new techniques to accurately estimate information transfer in neural networks. We have used these techniques to train neural networks that compress away irrelevant information, as well as to analyze (and challenge) the idea that the information bottleneck principle explains the success of recent machine learning architectures.
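One such technique, from “Estimating mixture entropy with pairwise distances”, estimates the entropy of a mixture with weights c_i and components p_i as Ĥ_D = Σ_i c_i H(p_i) − Σ_i c_i ln Σ_j c_j exp(−D(p_i‖p_j)), where D is a pairwise distance between components. Using the Kullback-Leibler divergence for D gives an upper bound on the mixture entropy, and using the Bhattacharyya distance gives a lower bound. The Python sketch that follows assumes equal weights and isotropic Gaussian components sharing one width σ, for which both distances have simple closed forms; the function name is hypothetical.

```python
import numpy as np


def mixture_entropy_bounds(means, sigma):
    """Pairwise-distance entropy estimates (in nats) for an equal-weight
    mixture of isotropic Gaussians N(mu_i, sigma^2 I).
    Returns (lower, upper): Bhattacharyya-based and KL-based estimates."""
    means = np.asarray(means, dtype=float)
    n, d = means.shape
    weights = np.full(n, 1.0 / n)
    # All components share one covariance, hence one entropy H(p_i)
    h_comp = 0.5 * d * np.log(2 * np.pi * np.e * sigma**2)
    # Squared Euclidean distances between every pair of component means
    diff = means[:, None, :] - means[None, :, :]
    sq_dist = np.sum(diff**2, axis=-1)

    def estimate(pairwise_div):
        # inner[i] = sum_j c_j exp(-D(p_i || p_j))
        inner = np.exp(-pairwise_div) @ weights
        return h_comp - np.sum(weights * np.log(inner))

    kl = sq_dist / (2 * sigma**2)     # KL divergence, equal covariances
    bhatt = sq_dist / (8 * sigma**2)  # Bhattacharyya distance, equal covariances
    return estimate(bhatt), estimate(kl)


lower, upper = mixture_entropy_bounds([[0.0, 0.0], [1.0, 0.0], [0.0, 3.0]], sigma=1.0)
print(f"{lower:.3f} <= H(mixture) <= {upper:.3f}")
```

Both estimates are cheap (one pass over pairs of components), which is what makes this kind of estimator practical inside a training loop.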

Selected publications:

- A. Kolchinsky, B.D. Tracey, S. Van Kuyk, “Caveats for information bottleneck in deterministic scenarios”, ICLR, 2019, pdf

- A.M. Saxe et al., “On the information bottleneck theory of deep learning”, J. Stat. Mech., 2019, pdf

- A. Kolchinsky, “Nonlinear information bottleneck”, Entropy, 2019, pdf

- A. Kolchinsky, B.D. Tracey, “Estimating mixture entropy with pairwise distances”, Entropy, 2017, pdf

Novel information-theoretic measures for complex systems

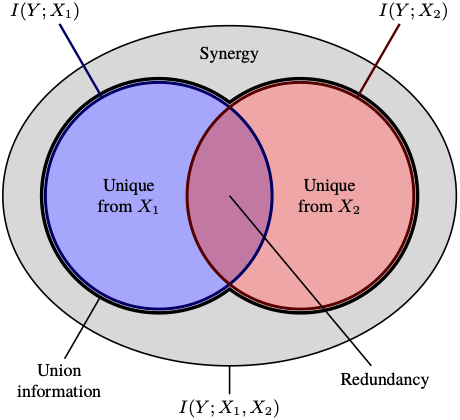

I am interested in quantifying how information is distributed and transferred in complex systems. For example, I have proposed a way to measure how much of the information that a set of sources provides about a target is redundant (i.e., present in all of the sources simultaneously). In another project, Bernat Corominas-Murtra and I proposed a way to measure how much information is correctly copied from one system to another (e.g., when a genetic sequence is copied without errors).
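To make the notion of redundancy concrete, the Python sketch that follows computes a classic, older redundancy measure, the Williams–Beer I_min. It is not the measure proposed in my work; it is shown only to illustrate what “information present in all of the sources” can mean. For each target value y, I_min takes the smallest specific information I(Y=y; X_i) = Σ_x p(x|y) ln[p(y|x)/p(y)] over the sources and averages it over p(y). The sketch assumes two discrete sources, and the function names are hypothetical.

```python
import numpy as np


def specific_information(p_sy, y):
    """I(Y=y; S) in nats, from a 2-D joint table p(s, y)."""
    p_s = p_sy.sum(axis=1)
    p_y = p_sy.sum(axis=0)
    total = 0.0
    for s in range(p_sy.shape[0]):
        if p_sy[s, y] > 0:
            p_s_given_y = p_sy[s, y] / p_y[y]
            p_y_given_s = p_sy[s, y] / p_s[s]
            total += p_s_given_y * np.log(p_y_given_s / p_y[y])
    return total


def i_min(p_x1x2y):
    """Williams-Beer redundancy of sources X1, X2 about target Y, in nats.
    p_x1x2y[a, b, y] is the joint probability table p(x1=a, x2=b, y)."""
    p = np.asarray(p_x1x2y, dtype=float)
    p_x1y = p.sum(axis=1)  # marginalize out X2 -> p(x1, y)
    p_x2y = p.sum(axis=0)  # marginalize out X1 -> p(x2, y)
    p_y = p.sum(axis=(0, 1))
    red = 0.0
    for y in range(p.shape[2]):
        if p_y[y] > 0:
            red += p_y[y] * min(specific_information(p_x1y, y),
                                specific_information(p_x2y, y))
    return red


# Both sources are exact copies of a fair bit Y: fully redundant
copy = np.zeros((2, 2, 2))
copy[0, 0, 0] = 0.5
copy[1, 1, 1] = 0.5

# Y = X1 XOR X2 with independent fair bits: neither source alone informs Y
xor = np.zeros((2, 2, 2))
for a in range(2):
    for b in range(2):
        xor[a, b, a ^ b] = 0.25

print(i_min(copy), i_min(xor))
```

In the copy case the redundancy equals the full entropy of Y (ln 2 nats, i.e., one bit), while in the XOR case it is zero, since the information about Y is only available from both sources jointly.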


Dissertation research

Before coming to the Santa Fe Institute, I did my PhD in complex systems and cognitive science with Luis M. Rocha at the Center for Complex Systems and Networks, Indiana University Bloomington.

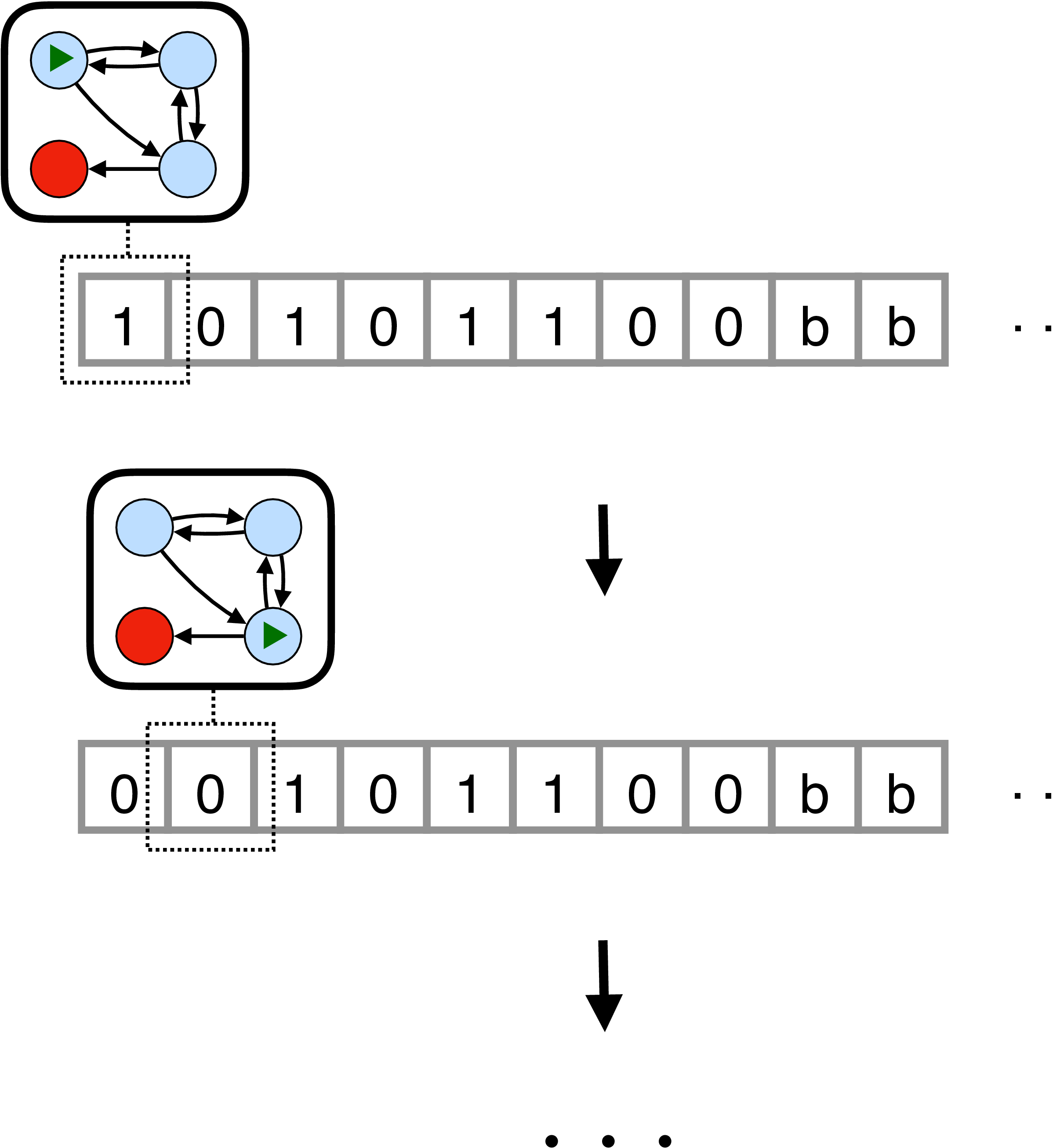

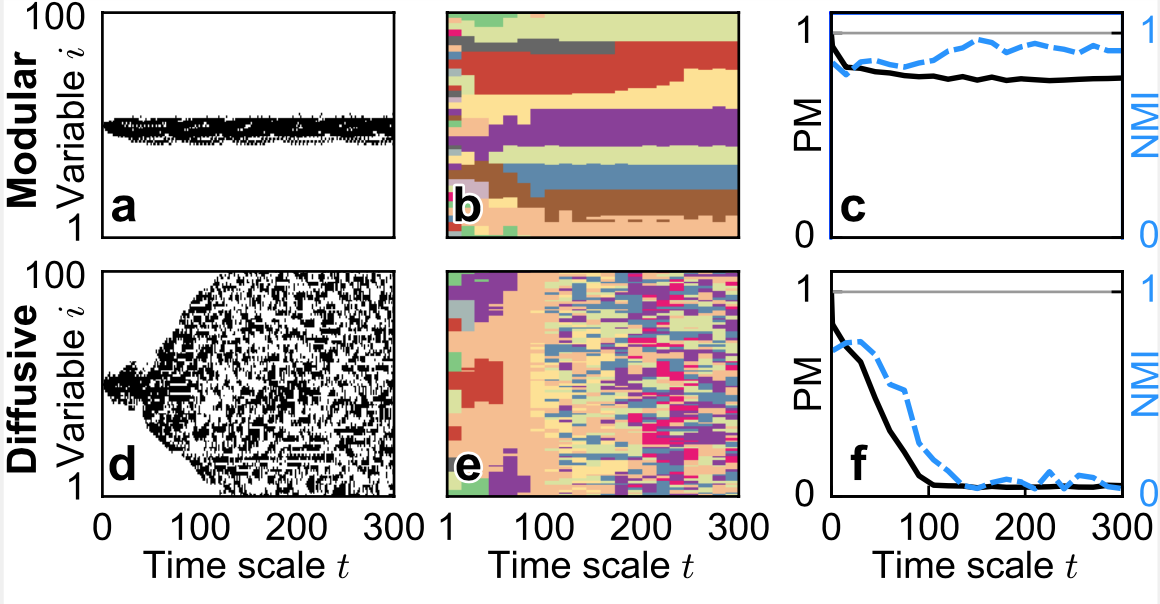

In my dissertation, I developed techniques to analyze the notion of “modularity” in distributed dynamical systems.